
Google DeepMind may have been aware of this problem long ago.


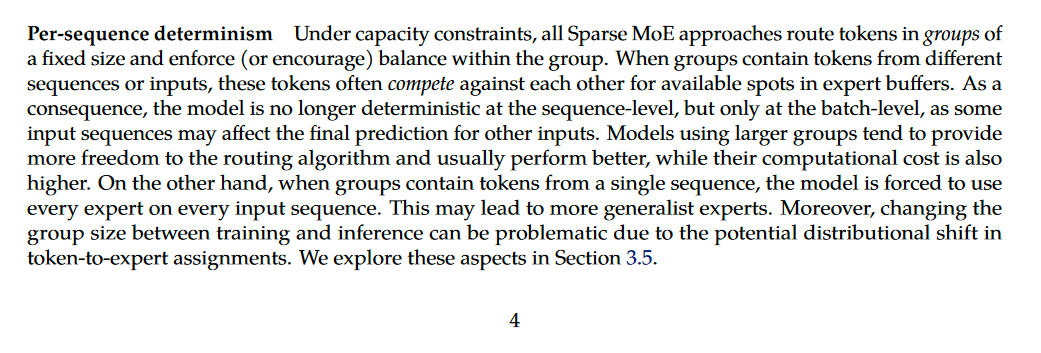

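Under capacity constraints, all sparse MoE models route tokens in groups of a fixed size and enforce (or encourage) balance within each group. When a group contains tokens from different sequences or inputs, those tokens often compete with each other for the available slots in the experts' buffers. As a consequence, the model is no longer deterministic at the sequence level, only at the batch level, because some input sequences can affect the final predictions for other inputs.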
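To make the capacity effect concrete, here is a minimal sketch in Python. It assumes top-1 routing, a single routing group, and a first-come-first-served rule for claiming buffer slots; the function name `route_top1_with_capacity`, the router scores, and the capacity value are all hypothetical and are not taken from GPT-4 or any particular MoE implementation. The same two tokens of sequence A are routed once on their own and once in a group that also contains sequence B.

```python
def route_top1_with_capacity(router_logits, capacity):
    """Top-1 routing for ONE routing group with a fixed per-expert capacity.

    router_logits: list of per-token score lists, one score per expert.
    Returns the chosen expert index for each token, or -1 if that expert's
    buffer was already full (the token gets no expert computation).
    Assumption: tokens claim buffer slots in the order they appear in the group.
    """
    num_experts = len(router_logits[0])
    load = [0] * num_experts
    assignment = []
    for scores in router_logits:
        # Highest-scoring expert for this token (first one wins on ties).
        expert = max(range(num_experts), key=lambda e: scores[e])
        if load[expert] < capacity:
            load[expert] += 1
            assignment.append(expert)
        else:
            assignment.append(-1)  # overflow: no slot left for this token
    return assignment


# Hypothetical router scores for two 2-token sequences over 2 experts;
# every token prefers expert 0.
seq_a = [[2.0, 0.1], [1.5, 0.3]]
seq_b = [[3.0, 0.0], [2.5, 0.2]]
CAPACITY = 2  # slots per expert in one group (illustrative value)

# Sequence A routed in a group of its own.
alone = route_top1_with_capacity(seq_a, CAPACITY)
# Sequence A routed in the same group as B, with B's tokens first.
batched = route_top1_with_capacity(seq_b + seq_a, CAPACITY)[len(seq_b):]

print("A alone:", alone)            # [0, 0]
print("A batched with B:", batched)  # [-1, -1]
```

Nothing about A changed and no sampling is involved, yet its tokens lose their expert slots because B claimed them first. This is what "deterministic only at the batch level" means in practice.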

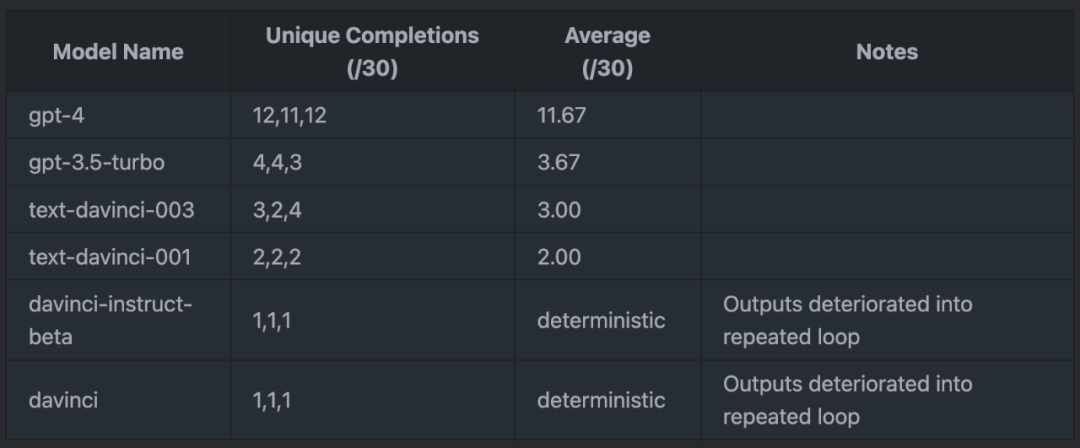

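The GPT-4 API is hosted on a backend that performs batch inference. Although some of the randomness may stem from other factors, the vast majority of the nondeterminism in the API comes from its sparse MoE architecture failing to enforce per-sequence determinism.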

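In other words, Sherman Chann hypothesizes that "batch inference in sparse MoE models is the root cause of most of the nondeterminism in the GPT-4 API." To test this hypothesis, he used GPT-4 to write the following script, which sends the same prompt C = 30 times to each model at `temperature=0` and counts how many distinct completions come back: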

```python
# Requires the legacy openai Python SDK (< 1.0): openai.ChatCompletion and
# openai.Completion were removed in 1.0. The API key is read from the
# OPENAI_API_KEY environment variable. The completion models listed below
# have since been deprecated by OpenAI, so those calls may now fail.
import json
import time
from time import sleep

import openai

chat_models = ["gpt-4", "gpt-3.5-turbo"]
message_history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Write a unique, surprising, extremely randomized story with highly unpredictable changes of events."},
]

completion_models = ["text-davinci-003", "text-davinci-001", "davinci-instruct-beta", "davinci"]
prompt = "[System: You are a helpful assistant]\n\nUser: Write a unique, surprising, extremely randomized story with highly unpredictable changes of events.\n\nAI:"

results = []


class TimeIt:
    """Context manager that prints the wall-clock time spent inside the block."""

    def __init__(self, name):
        self.name = name

    def __enter__(self):
        self.start = time.time()

    def __exit__(self, *args):
        print(f"{self.name} took {time.time() - self.start} seconds")


C = 30  # number of completions to make per model
N = 128  # max_tokens

# Testing chat models
for model in chat_models:
    sequences = set()  # distinct outputs; more than one means nondeterminism at temperature=0
    errors = 0  # although I track errors, at no point were any errors ever emitted
    with TimeIt(model):
        for _ in range(C):
            try:
                completion = openai.ChatCompletion.create(
                    model=model,
                    messages=message_history,
                    max_tokens=N,
                    temperature=0,
                    logit_bias={"100257": -100.0},  # this doesn't really do anything, because chat models don't do <|endoftext|> much
                )
                sequences.add(completion.choices[0].message["content"])
                sleep(1)  # cheaply avoid rate limiting
            except Exception as e:
                print("something went wrong for", model, e)
                errors += 1
    print(f"\nModel {model} created {len(sequences)} ({errors=}) unique sequences:")
    print(json.dumps(list(sequences)))
    results.append((len(sequences), model))

# Testing completion models
for model in completion_models:
    sequences = set()
    errors = 0
    with TimeIt(model):
        for _ in range(C):
            try:
                completion = openai.Completion.create(
                    model=model,
                    prompt=prompt,
                    max_tokens=N,
                    temperature=0,
                    logit_bias={"50256": -100.0},  # prevent EOS
                )
                sequences.add(completion.choices[0].text)
                sleep(1)
            except Exception as e:
                print("something went wrong for", model, e)
                errors += 1
    print(f"\nModel {model} created {len(sequences)} ({errors=}) unique sequences:")
    print(json.dumps(list(sequences)))
    results.append((len(sequences), model))

# Printing table of results
print("\nTable of Results:")
print("Num_Sequences\tModel_Name")
for num_sequences, model_name in results:
    print(f"{num_sequences}\t{model_name}")
```
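The script's metric, the number of distinct completions returned at `temperature=0`, can also be reproduced offline with a toy model. The sketch below is a hypothetical simulation, not GPT-4: each request is reduced to a single token that prefers one of two experts, our request lands at a random position in a routing group filled with randomly chosen requests from other users, and a made-up lookup table (`toy_greedy_answer`) stands in for greedy decoding. `CAPACITY`, `batch_size`, and all function names are assumptions for illustration.

```python
import random

NUM_EXPERTS = 2
CAPACITY = 2  # assumed slots per expert per routing group


def route(preferences, capacity=CAPACITY):
    """Assign each token to its preferred expert, or None if that expert is full.

    Tokens claim slots in group order (an illustrative assumption).
    """
    load = [0] * NUM_EXPERTS
    assignment = []
    for expert in preferences:
        if load[expert] < capacity:
            load[expert] += 1
            assignment.append(expert)
        else:
            assignment.append(None)  # dropped: no expert computation
    return assignment


def toy_greedy_answer(expert):
    # Hypothetical stand-in for argmax decoding: the answer depends only on
    # which expert (if any) processed the token. No sampling is involved.
    return {0: "cat", 1: "dog", None: "the"}[expert]


def call_api(target_pref, rng, batch_size=4):
    """One simulated temperature-0 request, batched with other users' requests."""
    others = [rng.randrange(NUM_EXPERTS) for _ in range(batch_size - 1)]
    position = rng.randrange(batch_size)  # where our request lands in the group
    group = others[:position] + [target_pref] + others[position:]
    return toy_greedy_answer(route(group)[position])


C = 30  # same number of calls per model as in the script
rng = random.Random(0)
sequences = {call_api(0, rng) for _ in range(C)}
alone = {call_api(0, rng, batch_size=1) for _ in range(C)}
print(f"batched: {len(sequences)} unique answers {sorted(sequences)}")
print(f"alone:   {len(alone)} unique answer(s) {sorted(alone)}")
```

With `batch_size=1` the request always gets its expert, so every call returns the same answer. When other requests share the group, the request is sometimes dropped, so repeated identical calls can produce more than one distinct answer even though the "model" itself is fully deterministic.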
